The Ongoing Journey: Problem Solving in Special Education with iReady Insights

What is RIOT/ICEL and what does it have to do with my vocabulary project??

How does it all work?

RIOT: Review, Interview, Observation, Test

ICEL: Instruction, Curriculum, Environment, and Learner

The Surprising Way iReady Data Can Transform Student Outcomes

With everything I have to deal with as a special education teacher, why in the world would I ever focus on a student's vocabulary? The answer is quite simple. It impacts EVERYTHING!!!

What does this have to do with planning? Planning for students to meet IEP goals is ALL based on data. Read on to see how I start planning for my Orton-Gillingham (OG) groups by answering the larger questions: What in the world is going on with my students' vocabulary scores? Is there anything I can do to increase their vocabulary?

I spend the bulk of my teaching time (like everyone else) on phonemic awareness and phonics with a side of fluency and comprehension.

Yes, vocabulary is built into each lesson, but is it enough???

I would hazard a guess that, for the group of students this project focuses on, it's not even close to enough to close their gaps.

Five reasons this is important

- Improved Communication Skills: A strong vocabulary enables students to express themselves clearly and effectively, helping them articulate their thoughts, ideas, and emotions with confidence. It allows them to engage in meaningful conversations, express their needs and opinions, and actively participate in classroom discussions.

- Reading and Comprehension: As students encounter new words in texts, a rich vocabulary enables them to decipher the meaning of unfamiliar words and understand the overall context. The more words students are familiar with, the better equipped they are to comprehend and enjoy a wide range of written material, expanding their horizons and fostering a love for reading.

- Academic Success: Many subjects, such as language arts, social studies, and science, require students to understand and use specific vocabulary terms. By expanding their vocabulary, elementary students can better understand textbook content and comprehend instructions. A broad vocabulary also contributes to better writing skills, allowing students to express their ideas fluently and effectively in assignments and essays.

- Critical Thinking and Problem Solving: A diverse vocabulary enhances critical thinking and problem-solving abilities. Vocabulary development fosters cognitive flexibility, enabling students to analyze problems, make connections, and draw conclusions based on the information available to them.

- Increased Confidence and Self-esteem: Building a strong vocabulary instills confidence and boosts self-esteem in elementary students. When children possess a rich vocabulary, they feel more assured in their ability to express themselves and engage in social interactions. They become more comfortable speaking in front of others, advocating for themselves, and participating actively in group activities. This confidence extends beyond the classroom and positively impacts their overall personality development and social interactions.

Science of Reading and Vocabulary

The Science of Reading model recognizes the intricate connection between students' vocabulary and reading development. Vocabulary is a fundamental component of reading comprehension, as understanding the meaning of words is crucial for understanding written text.

In the Science of Reading model, vocabulary instruction is seen as an essential part of teaching reading skills. By explicitly teaching students the meanings of words, word relationships, and word-learning strategies, educators can equip them with the tools necessary to decode unfamiliar words and make connections between words and their meanings.

A strong vocabulary enhances students' ability to comprehend and analyze texts, make inferences, and engage in critical thinking. Furthermore, vocabulary instruction in the Science of Reading model goes beyond isolated word memorization; it focuses on teaching words in context, promoting a deeper understanding of how words are used and their nuances.

Science of Reading, Vocabulary, and Special Education

Working within the Science of Reading model, I have witnessed firsthand how significantly a weak vocabulary can impact students. For students with learning disabilities or language delays, the lack of a solid vocabulary foundation poses immense challenges in their reading journey.

Without a strong vocabulary, students struggle to comprehend texts, decode unfamiliar words, and make meaningful connections between words and their meanings. This weak vocabulary hinders their ability to access grade-level content, understand instructions, and participate fully in classroom activities.

It also affects students' overall confidence and self-esteem, as they may feel frustrated and left behind compared to their peers. As a special education teacher, I recognize the critical importance of addressing vocabulary deficits through explicit instruction, targeted interventions, and multisensory approaches.

By incorporating evidence-based strategies from the Science of Reading model, such as word-learning techniques and vocabulary-building exercises, we can help these students develop a robust vocabulary, overcome reading challenges, and unlock their full potential for academic success.

Which Cliff did I jump off First?

Head-first into some action research, because I need something that doesn't replace what I'm already doing but is still evidence-based. But before I jump head-first into setting this idea up … a reality check about why iReady is even part of my thinking as a special education teacher.

How my building and I use iReady:

- My state and building require us to use it. Classroom teachers use the benchmark scores as part of the ratings in their yearly professional evaluations, most often comparing beginning of the year to mid-year.

- Teachers do use (though it's frowned upon 🙄) either the Benchmark, Category, or Growth Monitoring score for Read Plans.

- Read Plan cut scores, which identify the K-3 students who need to be placed on a Read Plan, come from iReady Scaled Scores.

- Our building RTI/MTSS team lets teachers use the same Read Plan goals for RTI/MTSS goals to help with the workload.

- iReady as a whole is only as good as the student taking it, meaning the results also reflect how a student feels about testing.

- iReady aligns with state standards.

- Specialists and administration look at most of the data from a balcony view, meaning a whole grade or a whole population of students.

- iReady will pull out program strengths and needs. But it takes time, with both the program and iReady, before you have a solid enough picture to make decisions.

- Building interventionists use the iReady Benchmark to create groups to pull for both reading and math.
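To picture how a cut score turns a list of Scaled Scores into a Read Plan list, here is a minimal sketch. The cut score values, student names, and the `flag_for_read_plan` function are hypothetical placeholders, not actual iReady or state cut scores, and it assumes "below the cut" means strictly less than the cut score.

```python
# HYPOTHETICAL cut scores by grade: placeholders only, NOT real iReady or state values.
HYPOTHETICAL_CUT_SCORES = {"K": 350, "1": 400, "2": 450, "3": 500}


def flag_for_read_plan(roster, cut_scores):
    """Return names of K-3 students whose Scaled Score is below their grade's cut score.

    roster: list of (name, grade, scaled_score) tuples.
    cut_scores: dict mapping grade -> cut score.
    """
    flagged = []
    for name, grade, scaled_score in roster:
        cut = cut_scores.get(grade)
        if cut is None:
            # No cut score for this grade (e.g., grades above 3), so skip the student.
            continue
        if scaled_score < cut:
            flagged.append(name)
    return flagged


roster = [
    ("Student A", "1", 385),
    ("Student B", "1", 420),
    ("Student C", "3", 480),
    ("Student D", "5", 510),
]
print(flag_for_read_plan(roster, HYPOTHETICAL_CUT_SCORES))  # ['Student A', 'Student C']
```

A flag like this is only a screening starting point; it says nothing about why a student scored low.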

A few cons to using iReady Benchmark scores, category, or Growth Monitoring Scores to make decisions.

- iReady Reading will pull out a program's and a grade level's strengths and needs, aka the good, the bad, and the ugly. (Ask the question, but be prepared for the answer even if you don't like it.) In my case this falls way outside my purview, but it has come up in student-specific conversations (which is toad-ally fun).

- To the best of my knowledge, the Benchmark data (whole and category Scaled Scores) are the only data you can act on at a macro scale. An example: "By the iReady mid-year benchmark, and given small group phonics instruction, Joey will be able to increase his iReady phonics score from 350 to 400 scaled points."

- As I mentioned above, if a student's not feeling it, how accurate is the score??? Hence the need for a body of evidence when you start talking about additional interventions, a course change, or special education testing.

- Using Growth Monitoring data is a no-go. The Growth Monitor is designed to be a "dipstick" check on how things are going: short and to the point. It doesn't test across all five domains every time you give it, which means there is a high probability of getting misleading data. Couple that with making intervention decisions off of it and, well … off the cliff we go. It also takes at least 4 data points to get a student-specific trend line.

I cannot change how my building uses iReady for intervention progress monitoring. I can only change the progress monitoring tool when students are brought to me for review or when a teacher comes to me with a question about which tool to use.

Teachers and Parents: If you use or see iReady Progress Monitoring for Read Plans or for RTI/MTSS goals, ask yourself, “Is this progress monitoring tool going to give me the information I need to make instructional decisions?” “Is it specific enough to tell me if the student has mastered the skill or not?”

If you don't have to, do NOT use it to create goals or as a progress monitoring tool.

What do I do with iReady Data as a Special Education teacher?

As a special education teacher, I use iReady as one part of a student's body of evidence. It is part of their whole data story. It is part of the WHOLE STUDENT and is never used as the end-all-be-all of a student.

Why????

Depending on which data set you are looking at within iReady, you can only glean specific information about a student or about Core instruction.

In my building, the hope is that if a student is in an intervention, you can see it translate back into moving Benchmark scores, aka the student's Annual or Stretch Growth. (This also requires additional data, not just these data points.)

This means iReady should not replace intervention-specific data collection: the micro data you collect on whether the intervention is working. iReady will only give you hints of carryover.

iReady is the macro, big-picture view. In my building, iReady is a pretty good predictor of how 3rd-6th grade students will perform on the State Assessment in April. (Yes, this means the Spring Benchmark is given after students take the State Assessment. And, yes, I can only speak for my state and building. Our state-reported data has held this idea to be true for the last four years, even through COVID, both good and bad.)

Back to how I use this information as part of my data collection for students who see me for reading or math.

- The Fall benchmark is where I look at where my students scored the lowest and the highest. This gives me a gauge of how students are coming back to school after 10 weeks off. These scores tend to align with IEP goals and end-of-year progress monitoring data. For example, the Phonics "Can Dos" match the CORE Phonics Survey data.

- For Benchmark-to-Benchmark data, look at the percentages; they can tell you if students dropped. I use the percentage data more than the Scaled Scores. (If you can, always print out the benchmark data.)

- I look at the Can Dos to gain insight into skill breakdowns. These can give ideas as to the next steps and may or may not align with IEP goals. The insights here help me more with math than reading.

- I pull the Diagnostic results for all the grades, aka the ultimate balcony view. This is a must for my LD reports and any intervention questions I get. I pull grade-level Scaled Score averages after each Benchmark because I have to report how the student compares to their grade-level peers.

- From an RTI/MTSS perspective, the Diagnostic Report allows you to break down the data to understand whether you have a strong core in reading or math and to set building or grade-level goals to move students across bands.

- My state and building/district mandate classroom teachers give the Reading growth monitor each month for Read Plan students. It takes at least four data points to get anything useful from the information. (See #7 for more)

- I can assign the Math Growth Monitor. I have given it in the past as part of the monthly progress monitoring data I collect. As with Reading, it takes time to get anything one could call useful, and certainly nothing I would ever use to set goals. If, and I do mean if, the student took the assessment seriously, I can see whether both Core and intervention are working, or whether they were messing around on a Diagnostic. (Like that never happens.)

- The Benchmark numbers are the ONLY data I can usefully use to make instructional decisions. This means I can compare Fall to Winter, Winter to Spring, and Fall to Spring. Historically, the Winter data in my building is not reliable, as most students drop. (Some a little. Some a lot. That's a whole different rant for a different time. lol.)
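Here is a minimal sketch of the Fall/Winter/Spring comparisons described above, assuming each benchmark window gives one percentage per student and that a negative change counts as a drop. The `benchmark_changes` and `compare_to_grade_average` names and every number are hypothetical, not iReady report fields.

```python
from itertools import combinations

# Benchmark windows in the order they are given during the school year.
WINDOWS = ["Fall", "Winter", "Spring"]


def benchmark_changes(percentages):
    """Change in percentage points between every pair of benchmark windows.

    percentages: dict mapping window name -> the student's percentage for that window.
    Returns a dict mapping (earlier, later) -> later minus earlier.
    """
    changes = {}
    for earlier, later in combinations(WINDOWS, 2):
        if earlier in percentages and later in percentages:
            changes[(earlier, later)] = percentages[later] - percentages[earlier]
    return changes


def compare_to_grade_average(student_score, grade_scores):
    """Return (grade-level average, student score minus that average)."""
    average = sum(grade_scores) / len(grade_scores)
    return average, student_score - average


# Hypothetical student and grade-level data.
changes = benchmark_changes({"Fall": 34, "Winter": 28, "Spring": 41})
drops = [pair for pair, change in changes.items() if change < 0]
print(changes)  # {('Fall', 'Winter'): -6, ('Fall', 'Spring'): 7, ('Winter', 'Spring'): 13}
print(drops)    # [('Fall', 'Winter')]
print(compare_to_grade_average(470, [480, 510, 495, 515]))  # (500.0, -30.0)
```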

I did promise the good, the bad, and the ugly. This is how we use iReady. Is it the only way? Probably not. Are there other reports, things to glean, or things not to do? Most likely, but this is what I'm going with.

Stay tuned for how I plan to attack this for the coming school year, and pick up some research-backed nuggets, aligned with the Science of Reading, that you can take back and use in the fall to build student vocabulary.

What I use to help me make Data-Driven Decisions

I was wrapping up my post-observation meeting with my principal when data came up. He asked, “How did you come to the decision to teach what you did?”

So, I pulled out a copy of my Assessment Data Analysis. I love LOVE using this form. {Catch the video to see how I fill it out and grab your own copy.}

The cool thing about this form is the power, control, and guidance it gives you over your data. It is also open-ended enough to use any pre-assessment you want. Well, within reason.

The data I used was from my Orton-Gillingham groups: their most recent pre-test from my Phonics Progress Monitoring. I assessed them using the Short Vowel Mixed Digraphs.

This Phonogram Progress Monitoring can be used as a pre- and post-assessment for:

- Teacher Evaluations

- RTI/MTSS Body of Evidence

- Monitoring Progress of Intervention groups

- Micro IEP Goal Progress

Assessment Data Analysis

This Data Analysis is perfect for RTI/MTSS interventions and Special Education groups, or if you have to provide data as part of the teacher evaluation, like me. Bonus: administrators love it, as you have your thinking right there on paper.

I use this ALL the time. I keep it in each group's binder. This doesn't replace IEP goal progress monitoring, but it gets me out of the weeds. I think most of us in Special Education get caught up in the microdata a little too much and forget to come up for air.

This form allows me to see the group data from a balcony view. Just like with my Phonics Progress Monitoring, I can break down where a student is struggling and differentiate my lesson to target more nonsense words, more sentence fluency work, or more controlled connected text.

I love that I can catch misconceptions, like vowel confusions, right from the beginning instead of later on.

This year, part of my professional goal has been to find a way to track growth and mastery using Orton-Gillingham, to make grade-level skill carry-over conversations easier. I don't know about you, but my classroom teachers like to see the data before they make decisions. [I love this, as it has been a HUGE RTI and intervention push!!]

I used my Phonics Progress Monitoring Tool.

A couple of important things about my Phonics Progress Monitoring tool

- Yes, I use an Orton-Gillingham scope & sequence to provide explicit phonics instruction toward my students' special education goals, but it's TOTALLY OKAY if you don't. It will still HELP you determine whether students have mastered the phonogram in question.

- It will work with ANY phonics scope and sequence, from Core to Special Education.

- This product is bottomless and growing, so grab yours before it grows.

How to Fill out the Assessment Data Analysis

This video will show you how I filled out the form using my Phonics Progress Monitoring Tool but it can be used with any assessment.

Pick an assessment that can be used as a pre-test or baseline and that covers a short stretch of instruction, like your next math unit on double-digit addition or subtraction, your next grammar unit, or your next phonics unit. Unit quizzes work: just pull something from toward the end of the unit or subject. This will help you establish a baseline on most, if not all, of the standards you will be teaching. (I try to keep mine to a page or fewer than 10 questions.)

You don't need multiple teachers to use this form.

Give the assessment and grade it.

Establish and define Mastery. AKA: what’s that score that tells you the student’s “got it.” (Most of the time I go with 80% but it depends on the skill. For my phonics work, I establish mastery at 90%.) Write down whatever you decide. It will not change for this round.

Starting on the Pre-Assessment side: fill out the date, Unit and Standard(s), Length of the unit (I have found making this less than 5 days sets everyone up.), and Big Ideas.

Moving down the form: add teacher(s) name, the total number of students who took the assessment, the number and percent of students proficient and higher, and the number and percent of students not proficient.
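The counts and percentages in that part of the form are simple arithmetic. Here is a minimal sketch, assuming scores are recorded as percent correct and "proficient and higher" means at or above the mastery cut you wrote down. The `proficiency_summary` function and the student scores are hypothetical.

```python
def proficiency_summary(scores, mastery_pct):
    """Summarize one pre-assessment for the Assessment Data Analysis form.

    scores: dict mapping student name -> percent correct (0-100).
    mastery_pct: the mastery cut decided before grading (e.g., 80 or 90).
    """
    total = len(scores)
    if total == 0:
        raise ValueError("no scores to summarize")
    proficient = [name for name, pct in scores.items() if pct >= mastery_pct]
    not_proficient = [name for name, pct in scores.items() if pct < mastery_pct]
    return {
        "total": total,
        "proficient_count": len(proficient),
        "proficient_pct": 100 * len(proficient) / total,
        "not_proficient_count": len(not_proficient),
        "not_proficient_pct": 100 * len(not_proficient) / total,
    }


# Hypothetical group scored against a 90% mastery cut (the phonics cut mentioned above).
group = {"Student A": 95, "Student B": 90, "Student C": 85, "Student D": 60, "Student E": 40}
print(proficiency_summary(group, 90))
# {'total': 5, 'proficient_count': 2, 'proficient_pct': 40.0, 'not_proficient_count': 3, 'not_proficient_pct': 60.0}
```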

The last three boxes will have student names. This is where you need to know your students and the material that is going to be taught.

In the first of these three boxes, write the names of the student(s) who will likely be proficient by the end of instructional time, meaning those who are already close to proficient.

In the second box, write the names of the student(s) likely to be proficient by the end of instructional time but who have far to go.

In the last box, write the names of students who will likely not be proficient by the end of the instructional time. These students will need extensive support.
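Placing names in these three boxes is a judgment call, but a first-pass sort by distance from mastery can be a place to start before you apply what you know about each student. This sketch assumes only non-proficient students go into the boxes; the `sort_into_boxes` function and the 10- and 30-point margins are hypothetical, not part of the form.

```python
def sort_into_boxes(scores, mastery_pct, close_margin=10, reachable_margin=30):
    """First-pass placement of non-proficient students into the form's three boxes.

    close_margin and reachable_margin are HYPOTHETICAL percentage-point margins;
    your knowledge of the student and the unit should override them.
    """
    boxes = {
        "likely_proficient_close": [],
        "likely_proficient_far_to_go": [],
        "likely_not_proficient": [],
    }
    for name, pct in scores.items():
        if pct >= mastery_pct:
            continue  # already proficient, so not placed in any of the three boxes
        distance = mastery_pct - pct
        if distance <= close_margin:
            boxes["likely_proficient_close"].append(name)
        elif distance <= reachable_margin:
            boxes["likely_proficient_far_to_go"].append(name)
        else:
            boxes["likely_not_proficient"].append(name)
    return boxes


# Hypothetical scores against a 90% mastery cut.
group = {"Student A": 95, "Student B": 90, "Student C": 85, "Student D": 60, "Student E": 40}
print(sort_into_boxes(group, 90))
```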

Let me show you how I make this work with a group of students I provide explicit phonics instruction to.

Using this form to make data decisions will help you move your students. Remember: data doesn't judge. It is what it is. Yes, even when my data sucks, it is also a place to start. When I do progress monitoring, someone always asks if it's a test. My answer is always the same: "No. It tells me what we need to work on and what I need to do to help you."

This is one way to look at data. I'd love to hear how you look at your data.

Chat soon,

PS. Make sure to grab a FREE sample.

Phonics Progress Monitoring

Grab your FREE Digraphs Phonics Progress Monitoring Sample to explode your student's phonics growth.


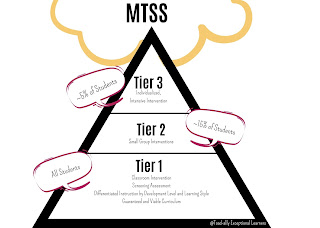

101: MTSS & RTI

What is MTSS?

A Multi-Tiered System of Supports (MTSS) is a framework of team-driven, data-based problem solving for improving the outcomes of every student through family, school, and community partnering and a layered continuum of evidence-based practices applied at the classroom, school, district, region, and state level. MTSS is a coherent continuum of evidence-based, system-wide practices to support a rapid response to academic and behavioral needs, with frequent data-based monitoring for instructional decision-making to empower each student to achieve high standards. MTSS models rely on data to assess student needs and help teachers understand which kinds of intervention students need within each tier.

What is Response to Intervention?

Response to Intervention, or RTI, is an educational approach designed to help all learners to succeed, through a combination of high-quality instruction, early identification of struggling students, and responsive, targeted evidence-based interventions to address specific learning needs. RTI uses ongoing progress monitoring and data collection to facilitate data-based decision-making. In addition, the implementation of RTI will assist in the correct identification of learning or other disorders.

In my building, MTSS is the umbrella and RTI falls under it. All students are active participants in MTSS but not all students will be active participants in RTI.

How does RTI work?

It operates on a 3-tiered framework of interventions at increasing levels of intensity. The process begins with high-quality core instruction in the general education classroom. Teachers use a variety of instructional methods to maximize student engagement and learning: modeling of skills, small group instruction, guided practice, independent practice, to name a few.

Through universal screening methods, struggling learners are identified and are given more intense instruction and interventions that are more targeted to individual needs. By giving frequent assessments and analyzing data, teachers make decisions about what levels of intervention will best support student achievement.

What are the Tiers?

Tier I: This is the guaranteed and viable curriculum that all students receive each day within their general education classrooms. It is high-quality, research-based core instruction in the general education classroom. All students are given universal screening assessments to ensure that they are progressing and are learning essential skills. {Sidenote: A guaranteed and viable curriculum guarantees equal opportunity for learning for all students, along with adequate time for teachers to teach content and for students to learn it. It is guaranteed when the curriculum being taught is the curriculum being assessed, and it is viable when adequate time is ensured to teach all determined essential content.}

Within Tier 1, all students receive high-quality, scientifically based instruction provided by qualified personnel to ensure that their difficulties are not due to inadequate instruction. All students are screened on a periodic basis to establish an academic and behavioral baseline and to identify struggling learners who need additional support. Students identified as being “at-risk” through universal screenings and/or results on state- or district-wide tests receive supplemental instruction during the school day in the regular classroom. The length of time for this step can vary, but it generally should not exceed 8 weeks. During that time, student progress is closely monitored using a validated screening system and documentation method.

Tier II: More intensive, targeted instruction, matched to student needs, is delivered to students who are not making adequate progress in Tier I; they often receive instruction in small groups. They receive progress monitoring weekly, and teachers regularly evaluate data to assess whether students are making progress or need different or more intense intervention.

Targeted interventions are part of Tier 2: students not making adequate progress in the regular classroom in Tier 1 are provided with increasingly intensive instruction matched to their needs on the basis of levels of performance and rates of progress. Intensity varies across group size, frequency and duration of intervention, and level of training of the professionals providing instruction or intervention. These services and interventions are provided in small-group settings in addition to instruction in the general curriculum. In the early grades (kindergarten through 3rd grade), interventions are usually in the areas of reading and math. A longer period of time may be required for this tier, but it should generally not exceed a grading period. Tier II interventions serve approximately 15% of the student population. Students who continue to show too little progress at this level of intervention are then considered for more intensive interventions as part of Tier 3.

Tier III: The most intensive, individualized level of intervention. Students who have not responded to Tier II intervention receive daily, small group or one-on-one instruction. Students in this level often are already receiving special education services, or are referred for further evaluation for special education.

Here students receive individualized, intensive interventions that target the students’ skill deficits. Students who do not achieve the desired level of progress in response to these targeted interventions are then referred for a comprehensive evaluation and considered for eligibility for special education services under the Individuals with Disabilities Education Improvement Act of 2004 (IDEA 2004). The data collected during Tiers 1, 2, and 3 are included and used to make the eligibility decision. This is typically about 5% of your student population.

So what does all of this mean???

What that means is this. A teacher or parent identifies a student’s needs, and they try some interventions. Sounds simple enough, right?

So what’s the problem?

I have a family member who was struggling in reading. Mom talked to the teacher. The teacher put the child in the RTI reading program, and she made progress and caught up with her peers.

That is the main benefit of RTI. For the right kid, with the right intervention, that’s all they need.

It can also look like a gifted student receiving enrichment in an area of strength like math.

The downside to RTI is that it can feel like the school or district is stalling on identifying special education needs. Remember, students are general education students first. RTI is a general education process. It's open to all students who fall below a benchmark. In Colorado, we look at iReady cut scores. Interventions need to be evidence-based (which doesn't always happen). This means teachers have to progress monitor students to ensure they are making progress within the selected intervention and, if they are not, bring them to the building RTI team.

Every building works this process differently. In my building, we ask all teachers who have concerns about students to bring them to the RTI team. This ensures that teachers feel supported, the correct interventions are in place and should the student need to move forward with a special education evaluation the data the team needs is there. We also encourage parents to join the meetings. There is always a follow-up meeting scheduled 6 to 8 weeks out.

IDEA specifically addresses RTI and evaluations.

The 2004 reauthorization of IDEA mentions RTI as part of the process of identifying a specific learning disability (SLD):

- In diagnosing learning disabilities, schools are no longer required to use the discrepancy model. The act states that “a local educational agency shall not be required to take into consideration whether a child has a severe discrepancy between achievement and intellectual ability[…]”

- Response to intervention is specifically mentioned in the regulations in conjunction with the identification of a specific learning disability. IDEA 2004 states, “a local educational agency may use a process that determines if the child responds to scientific, research-based intervention as a part of the evaluation procedures.”

- Early Intervening Services (EIS) are prominently mentioned in IDEA for the first time. These services are directed at interventions for students prior to referral in an attempt to avoid inappropriate classification, which proponents claim an RTI model does. IDEA now authorizes the use of up to 15% of IDEA allocated funds for EIS.

So this is the part where I expect to get pushback: RTI has been overused and abused. It has been used, often, to delay special education evaluations and services.

So much so that OSEP (the Office of Special Education Programs) has put out multiple guidance letters about this.

If your child is in RTI and is doing well, great! I mean it! I am always happy to see a child’s needs being met. However, keep it on your radar that RTI is sometimes used to delay evaluations or IEPs: the old “Let’s try RTI and wait and see.” Go with your gut. If you believe your child needs an IEP, request an IEP evaluation.

Bonus tip: Your child can be going through the IEP evaluation process and receive RTI interventions at the same time!

Parents, how do you know if your children are making progress?

An essential element of RTI is ongoing communication between teachers and parents. As parents, you are kept involved and informed of the process every step of the way, beginning with notification that your child has been identified as struggling in one or more areas and will receive more intensive intervention. If your child receives more targeted instruction in Tier II or Tier III, he or she will be progress monitored frequently. Teachers will share progress monitoring data with you regularly through meetings, phone calls, or emails, as well as progress reports sent home showing assessment data.

When in doubt, ask the teacher for the data.

This is one way the process can look. The big piece for RTI to work is having the progress monitoring data so decisions can be made in a timely way.

How I use WHY to Find Root Cause

Why?

How else are you going to figure out what the student's needs really are! The root cause is so much more than just the test scores or the informal assessment scores you get. Getting to the bottom, or root cause, of why a student struggles takes a team, an open mind, and time. It's hard to find the one or two things that, once you provide interventions or strategies for them, help the student take off.

My team mostly works on IEP goals; with the way building schedules have come together, that is all we have time for. We work as a team to find the root cause behind students' struggles. This is the process we use to find a student's root cause. When we work through a Root Cause Analysis, we follow the same steps: make sure you bring an open mind and your data.

Scenario

Problem Statement: The student struggles with decoding.

Formal Reading Assessment

- Alphabet: 63%ile

- Meaning: 2nd%ile

- Reading Quotient: 16th%ile

Based on formal testing, the student doesn't have any decoding concerns, but his Reading Comprehension (Meaning) score is significantly below the 12th%ile.

WHY?

I need more information.

DIBELS Scores for a 2nd grader

- Nonsense Word Fluency: 32 sounds; Benchmark 54 sounds in a minute; Gap 1.68

- Phoneme Segmentation Fluency: 47 sounds; Benchmark 40 sounds in a minute; Gap .85

- Oral Reading Fluency: 11 words; Benchmark 52 words in a minute; Gap 4.7

DIBELS shows the student knows their sounds and letters, but something is going on with oral reading fluency: its gap is greater than 2.0, which is significant.
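The Gap numbers listed above line up with the benchmark divided by the student's score (54 ÷ 32 ≈ 1.69, 40 ÷ 47 ≈ 0.85, 52 ÷ 11 ≈ 4.7), so this minimal sketch assumes that definition, along with the "greater than 2.0" cut from the text. The function names are hypothetical.

```python
SIGNIFICANT_GAP = 2.0  # the "greater than 2.0" cut used in this example


def gap_ratio(benchmark, score):
    """Gap as benchmark divided by the student's score (assumed definition)."""
    if score <= 0:
        raise ValueError("score must be positive to compute a gap ratio")
    return benchmark / score


def significant_gaps(measures, cutoff=SIGNIFICANT_GAP):
    """measures: dict name -> (student score, benchmark). Returns names with gap > cutoff."""
    return [name for name, (score, benchmark) in measures.items()
            if gap_ratio(benchmark, score) > cutoff]


# The 2nd grader's DIBELS scores from above: (student score, benchmark).
dibels = {
    "Nonsense Word Fluency": (32, 54),
    "Phoneme Segmentation Fluency": (47, 40),
    "Oral Reading Fluency": (11, 52),
}
for name, (score, benchmark) in dibels.items():
    print(f"{name}: gap {gap_ratio(benchmark, score):.2f}")
print(significant_gaps(dibels))  # ['Oral Reading Fluency']
```

A gap below 1.0, as with Phoneme Segmentation Fluency here, means the student is above the benchmark.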

WHY?

Complete:

- Error Analysis of ORF passage

- Assess sight words

- Does Phonological Processing need to be assessed?

Oral Reading Fluency error analysis shows 68% accuracy with 16 words read.
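Accuracy here is words read correctly divided by words attempted. This sketch assumes the 11 words from the ORF score are the words read correctly out of the 16 read, which matches the 68% figure; the `reading_accuracy` function is hypothetical.

```python
def reading_accuracy(words_correct, words_attempted):
    """Percent of attempted words read correctly."""
    if words_attempted <= 0:
        raise ValueError("words_attempted must be positive")
    if not 0 <= words_correct <= words_attempted:
        raise ValueError("words_correct must be between 0 and words_attempted")
    return 100 * words_correct / words_attempted


# Assumes 11 words correct out of 16 attempted on the ORF passage.
print(reading_accuracy(11, 16))  # 68.75
print(16 - 11, "errors")         # 5 errors
```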

Assessing sight words shows the student knows 41 of the first 100.

Because this looked like a decoding weakness, the team decided to assess Phonological Processing; scores were in the average range.

What do I know now?

The student has a decoding weakness. He would benefit from a highly structured phonics program.

Why??

This time I only needed three WHYS to figure out what the true problem is for the student. Sometimes you need more. On average it tends to run closer to five.

This process was completed with my team, not during RTI. The decision to target phonics could have been reached without the formal testing, using just DIBELS and sight words.

My team uses this approach to help each other when we get stuck and need to take a step back and need more voices to look at the data.

As a special education team, we target only IEP goals and scaffold the student's skills up to access the grade-level curriculum. So the more specific we can be the better--we don’t want to waste time messing around with large messy goals that don’t end up helping the student close achievement gaps.

Back to RTI.

How could this process be used during an RTI meeting?

Questions and dialogue are key here. Talk about what the numbers tell you. Start with strengths and needs. Just the facts! Don’t interpret anything. Work through the data dialogue process I outlined in my free E-Workbook: RTI Data Clarity. I also included several worksheets to help teams work toward finding a student’s root cause.

Working to find the root cause of why a student is struggling is hard work. Dialogue with your team is a great way to bring in more voices, which in turn brings in more ideas that may help the student. Make sure you bring the Data Clarity e-workbook to help.

Do you use a similar process to help your team find a student’s root cause? Feel free to brag about your success in the comments!

Are you wondering how you can use this idea with your team? Check out my free E-Workbook: RTI Data Clarity.

Chat soon,

Intervention Over? Now What?

I've started collecting my end-of-intervention data to review. I want to give you a closer look at what I do and the decisions I make for the next intervention.

Step 1: Collate your data

If you remember, I keep all the data for my interventions in a Google Sheet. (To see how I set up this intervention, click here.) I start by going back to my original data and updating it with the new data.

As you can see, I added three new pieces of data: each student's new baseline, the new gap, and the raw change from the original baseline.

In this case, I also color-coded the gap information. I did this to better see where the new gaps are and how well this intervention worked in closing them.
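If you'd rather see the sheet's logic spelled out, here is a minimal Python sketch of one updated row. It assumes the gap is benchmark / score (as in the DIBELS example) and that the new score becomes the new baseline. The field names, sample numbers, and color bands are all hypothetical; only the 2.0 "significant" cutoff comes from this post.

```python
from dataclasses import dataclass


@dataclass
class StudentRow:
    name: str
    original_baseline: float
    new_baseline: float  # end-of-intervention score, used as the next cycle's baseline
    benchmark: float

    @property
    def new_gap(self) -> float:
        # Assumed: gap = benchmark / score
        return self.benchmark / self.new_baseline

    @property
    def raw_change(self) -> float:
        # Raw change from the original baseline
        return self.new_baseline - self.original_baseline


def gap_color(gap: float) -> str:
    """Hypothetical color bands; set your own cutoffs."""
    if gap <= 1.0:
        return "green"   # at or above benchmark
    if gap <= 2.0:
        return "yellow"  # below benchmark, gap not yet significant
    return "red"         # significant gap (greater than 2.0)


# Hypothetical sample data, not the students in this post
rows = [
    StudentRow("Student A", original_baseline=20, new_baseline=35, benchmark=50),
    StudentRow("Student B", original_baseline=5, new_baseline=9, benchmark=50),
]

for r in rows:
    print(r.name, r.new_baseline, round(r.new_gap, 2), r.raw_change, gap_color(r.new_gap))
```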

Each student has their own graph, and I make sure every graph is up to date.

Each graph has a trendline. With the trendlines, I can see who is actively closing their gap faster than the goal line.

With these two pieces of data, I can make decisions about next steps.
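Under the hood, a trendline is usually a least-squares line through the weekly scores (the same slope and intercept the SLOPE and INTERCEPT functions return in Google Sheets). A minimal sketch, assuming the goal line runs from the baseline at week 0 to the goal at Week 19; the probe scores, baseline, and goal below are made up for illustration.

```python
def least_squares(weeks, scores):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(weeks)
    if n < 2:
        raise ValueError("need at least two data points")
    mean_x = sum(weeks) / n
    mean_y = sum(scores) / n
    sxx = sum((x - mean_x) ** 2 for x in weeks)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(weeks, scores))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def goal_line_slope(baseline, goal, goal_week):
    """Growth per week needed to go from the baseline (week 0) to the goal by goal_week."""
    return (goal - baseline) / goal_week


# Hypothetical weekly probe scores for one student
weeks = [1, 2, 3, 4]
scores = [12, 15, 17, 21]

trend_slope, trend_intercept = least_squares(weeks, scores)
needed = goal_line_slope(baseline=10, goal=50, goal_week=19)
print(f"trend {trend_slope:.2f}/week vs. goal line {needed:.2f}/week")
if trend_slope > needed:
    print("closing the gap faster than the goal line")
else:
    print("not keeping pace with the goal line")
```

Comparing slopes (growth per week) is what "faster than the goal line" means on the graph: a trendline steeper than the goal line will cross it.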

Step 2: I've Got My Data. Now What?

ALWAYS stick to FACT-based statements when talking about data. This helps me avoid student-specific judgments and opinions (e.g., they are slow, they are not working hard).

*Students 1, 2, & 5 have gaps larger than 6.

*Students 1 & 2 had single-digit growth.

*Students 3, 4, 5, & 6 had double-digit growth.

*Everyone had growth.

*Average growth was 45, up from 32.
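Fact statements like these are just counts and cutoffs, so they can be pulled straight from the sheet without any interpretation. A minimal sketch, assuming each student has a gap and a growth number; the students and numbers below are hypothetical, and the cutoffs (gap larger than 6, single- vs. double-digit growth) mirror the statements above.

```python
def fact_statements(students):
    """Build neutral, number-only statements from {name: (gap, growth)}."""
    big_gaps = [n for n, (gap, _) in students.items() if gap > 6]
    single = [n for n, (_, growth) in students.items() if 0 < growth < 10]
    double = [n for n, (_, growth) in students.items() if 10 <= growth < 100]
    facts = [
        f"Students with gaps larger than 6: {', '.join(big_gaps) or 'none'}",
        f"Single-digit growth: {', '.join(single) or 'none'}",
        f"Double-digit growth: {', '.join(double) or 'none'}",
    ]
    if all(growth > 0 for _, growth in students.values()):
        facts.append("Everyone had growth.")
    return facts


# Hypothetical numbers, for illustration only: {student: (gap, growth)}
data = {"1": (7.5, 4), "2": (6.8, 8), "3": (3.1, 15), "4": (2.4, 22)}
for fact in fact_statements(data):
    print("*" + fact)
```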

The Graphs:

*I look for trends: where the score (blue line) sits relative to the trendline (pink line) and the goal line (yellow line).

I pay close attention to where these lines meet the Goal (red line). Is it before Week 19 or after?

*This matters when determining whether they are closing their gaps fast enough.

*Remember, the point is to move students more than a year's worth of growth. How long it may take them to close their gaps is key to judging whether the intervention was successful for the student.

With this intervention, 3 students had great success, 2 did not, and it was moderately successful for 1.
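The "before or after Week 19" check can be done with arithmetic instead of eyeballing the graph: solve the trendline for the week when it reaches the goal. A minimal sketch; the slopes, intercepts, and goal score of 50 are hypothetical, and only Week 19 comes from this post.

```python
import math


def week_reaching_goal(slope, intercept, goal):
    """Week at which the trendline y = slope * week + intercept reaches the goal."""
    if slope <= 0:
        return math.inf  # a flat or falling trend never reaches the goal
    return (goal - intercept) / slope


GOAL_WEEK = 19  # from the post: does the trend meet the goal before or after Week 19?

# Hypothetical trendline values for two students
for name, slope, intercept in [("Student A", 2.9, 9.0), ("Student B", 1.2, 10.0)]:
    week = week_reaching_goal(slope, intercept, goal=50)
    verdict = f"by week {GOAL_WEEK}" if week <= GOAL_WEEK else f"after week {GOAL_WEEK}"
    print(f"{name}: reaches the goal around week {week:.1f} ({verdict})")
```

A student whose projected week lands well past Week 19 is one whose gap isn't closing fast enough, even if they are growing.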

Step 3: Analyze the Root Cause

(It takes at least five WHYs to get to a root cause. You may find you need more information, like a reading level or fluency data, BUT stick to the FACTS.)

WHY: Students need more encounters with sight words

WHY: Students have the easy sight words but don't know what to do with the more difficult ones

WHY: Students are not connecting sight words from text to text

WHY: Sight word knowledge is not carrying over to grade-level Oral Reading Fluency

WHY: Students need more practice besides decodable repeated readings, individual flashcard rings, and instructional book reading.

Analysis:

*Keep intervention structure

*Change up: add extra practice to build the first 50 words

*Keep intervention cycle to 4 weeks

*Ensure Reading Mastery lessons are being completed with fidelity!

To Do over the next 4 weeks:

*Give all students a Phonics screener

*Complete an Error Analysis on Oral Reading Fluency

The WHYs are always hard, but they help you drill down to what needs to change. You can also see that I have a list of things I need to do before the next cycle is over. These ideas fell out as I looked at the data, along with the big wondering: "Is this a phonics thing?" Well, I don't have the right data to answer that question. If you find yourself in the same spot, figure out your timeline for getting the information you need, and get it. But don't let it hold you up!! If you missed how I created this intervention, you can check it out here.

Here is what the next four weeks of Sight Word Intervention will look like:

*I will add an additional 4 weeks.

*I will add basic sight word books for the 3 students who made little growth.

*I will add exposure to more difficult sight words to all students based on the data from the grade level reading fluency.

*I will have a teammate come and observe a Reading Mastery lesson to ensure fidelity.

I hope this shows how I work through my data at the end of an intervention and make changes to support students over the next four weeks. Send a shoutout about how your interventions are going, and share any questions.

Chat Soon,
